Behavior-Based Robotics: A Brief Overview

What is behavior-based robotics (BBR)?

- Mataric's definition:

  Behavior-based robotics (BBR) bridges the fields of artificial intelligence, engineering, and cognitive science. The behavior-based approach is a methodology for designing autonomous agents and robots; it is a type of intelligent agent architecture. Architectures supply structure and impose constraints on the way robot control problems are solved. The behavior-based methodology imposes a general, biologically inspired, bottom-up philosophy, allowing for a certain freedom of interpretation. Its goal is to develop methods for controlling artificial systems (usually physical robots, but also simulated robots and other autonomous software agents) and to use robotics to model and better understand biological systems (usually animals, ranging from insects to humans).

- Take a look at Mataric's article in the MIT Encyclopedia of Cognitive Science at COGNET (cognet.mit.edu/MITECS/).

What are behaviors?

- Again from Mataric:

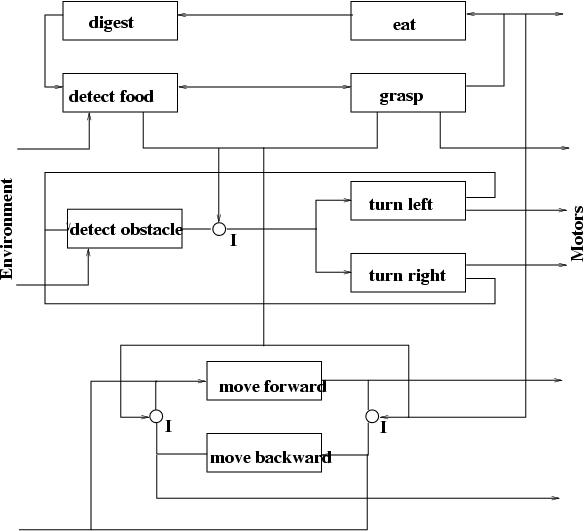

Behavior-based robotics controllers consist of a

collection of behaviors that achieve and/or maintain goals. For

example, "avoid-obstacles" maintains the goal of preventing collisions;

"go-home" achieves the goal of reaching some home destination. Behaviors are

implemented as control laws (sometimes similar to

those used in control theory), either in software

or hardware, as a processing element or a procedure. Each behavior can take inputs from the robot"s sensors (e.g.,

camera, ultrasound, infrared, tactile) and/or from other behaviors in the

system, and send outputs to the robot"s effectors (e.g., wheels, grippers,

arm, speech) and/or to other behaviors. Thus, a behavior-based

controller is a structured network of interacting behaviors.

- Motivation: compare to "finite-state machines (FSMs)" (e.g., the 2nd to

last sentence in the above quote)

- many architectures are actually direct variants of FSMs (e.g.,

subsumption,situated automata, and others)

- Need to implement control components that achieve "behaviors" and arrange

them in a control system so that the transitions between behaviors occur

according to specification, i.e., need an "architecture"

- Lots of different architectures out there, can be characterized by an

"architecture schema"
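The quote's picture of behaviors as control laws wired into a network can be sketched in Python. The sensor names (`front`, `home_bearing`), the `(forward_speed, turn_rate)` command format, and the rule that an active avoid-obstacles output overrides go-home are all illustrative assumptions, not part of Mataric's definition.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Sensors = Dict[str, float]       # hypothetical readings, e.g. {"front": 0.4}
Command = Tuple[float, float]    # hypothetical (forward_speed, turn_rate)

@dataclass
class Behavior:
    name: str
    control_law: Callable[[Sensors], Command]

def avoid_obstacles(s: Sensors) -> Command:
    # Maintains "no collisions": stop and turn when something is close ahead.
    return (0.0, 1.0) if s["front"] < 0.5 else (0.0, 0.0)

def go_home(s: Sensors) -> Command:
    # Achieves "reach home": drive forward while steering toward home.
    return (0.3, 0.5 * s["home_bearing"])

BEHAVIORS: List[Behavior] = [
    Behavior("avoid-obstacles", avoid_obstacles),
    Behavior("go-home", go_home),
]

def controller(s: Sensors) -> Command:
    # Every behavior reads the same sensors; their outputs meet at one node.
    outputs = {b.name: b.control_law(s) for b in BEHAVIORS}
    # One fixed wiring (an assumption): a non-zero avoid-obstacles output
    # overrides go-home. Other arbitration schemes are discussed later.
    avoid = outputs["avoid-obstacles"]
    return avoid if avoid != (0.0, 0.0) else outputs["go-home"]
```

With an obstacle 0.3 units ahead the controller turns in place; with a clear path it heads home.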

Architectures

- In general, architectures specify an arrangement of components (or functional units) and how they are connected
- Consequently, architectures define what is commonly called a "virtual machine" in CS (i.e., a non-physical, "software" machine with interacting "software" parts)
- Main way to "carve up" architecture space: reactive vs. deliberative architectures
  - Or better: deliberative vs. non-deliberative, as "reactive" has different meanings to different people:
    - reactive as "stateless"
    - reactive as "(tight) sensor-motor coupling" (no intermediary processing)
    - reactive as "simple"
    - reactive as "non-representational"
    - reactive as "fast, timely response"
  - Hence, be very careful when you read the term "reactive"; it may not mean what you think it means!
  - One (strong) characterization of deliberative architectures: they have components to perform "what-if" reasoning
    - need to be able to entertain representations of non-existent states (among other things)
  - Also possible: hybrid architectures that combine reactive and deliberative "sub-architectures"
- Other dimensions of architectural variation (Fig. 1.10 in the Arkin book is only partially useful):
  - Arrangement of components
    - e.g., are components arranged in a "hierarchy" (e.g., where components on higher levels dominate components on lower levels)?
  - Flow of control
    - e.g., sequential activation of components (cf. the "Omega" model by Albus, 1981)
  - Self-modifying vs. non-self-modifying architectures
    - e.g., architectures with learning mechanisms
  - Functional dependence on external factors vs. independent functioning
    - e.g., a chess program vs. the controller of a machine (think of the "thermostat" controlling the blinker)
  - Functions of components vs. emergent functions
    - e.g., "wall-following" implemented in a special component vs. emerging from the functioning of other components
  - Behavior arbitration
    - e.g., a centralized arbiter that decides which "behavior to execute"

Expressing/describing behaviors

- Need to capture our behavioral descriptions (e.g., formalize them)
- Different ways of capturing them:
  - stimulus-response diagrams
  - (mathematical) functional notation
  - finite state machines
  - formal methods (e.g., robotic schemas, situated automata, Java programs, etc.)
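As a minimal sketch of the finite-state-machine option, assuming a robot that perceives only "clear" or "obstacle", the table below maps (state, percept) pairs to (action, next state), i.e., a function of the form (Inputs, States) -> (Actions, States). The states and actions are hypothetical; the point is that the same percept can yield different actions depending on the internal state.

```python
from typing import Dict, List, Tuple

# (state, percept) -> (action, next_state); all names are illustrative.
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("wander", "clear"):     ("forward", "wander"),
    ("wander", "obstacle"):  ("reverse", "backing"),
    ("backing", "clear"):    ("turn-left", "turning"),
    ("backing", "obstacle"): ("turn-left", "turning"),
    ("turning", "clear"):    ("forward", "wander"),
    ("turning", "obstacle"): ("turn-left", "turning"),
}

def step(state: str, percept: str) -> Tuple[str, str]:
    """One application of the transition function."""
    return TRANSITIONS[(state, percept)]

def run(percepts: List[str], state: str = "wander") -> Tuple[List[str], str]:
    """Feed a percept sequence through the FSM, collecting the actions."""
    actions = []
    for p in percepts:
        action, state = step(state, p)
        actions.append(action)
    return actions, state
```

Feeding `["clear", "obstacle", "obstacle", "clear", "clear"]` yields forward, reverse, turn-left, forward, forward: the second "obstacle" produces a turn rather than another reverse because the machine is already in the "backing" state.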

Implementing "Behaviors"

How can we encode/implement "behaviors"?

- Remember: stimulus-response diagrams denote functions, i.e., mappings f:S->R (where S is the domain of stimuli and R the domain of responses)
  - Hence, in general, a behavior is any such mapping from possible stimuli to possible responses
- What are possible stimuli?
  - depends on sensors (e.g., pixel images in the case of cameras, a frequency spectrum for microphones, etc.)
  - In general: a stimulus can be taken to be a tuple <p,lambda>, where p denotes a particular perceptual class and lambda denotes the intensity of the stimulus
- What are the responses?
  - depends on effectors or actuators (e.g., motors, wireless transmitters, etc.)
  - often expressed in terms of strength and orientation for motors (think of vectors!)
  - In general: if a motor response involves physical movement, then it can be characterized by a six-tuple <x,y,z,theta,phi,psi> (three translational and three rotational degrees of freedom; an unconstrained rigid object has six DOFs)
  - Distinguish: holonomic from non-holonomic (a "non-holonomic constraint" is a limitation on the allowable velocities of an object)
- For both stimuli and responses, a notion of "strength" has to be defined
  - Issue: what is the magnitude?
- Interesting dichotomies:
  - discrete vs. continuous (in time and/or space)
  - analog vs. digital
  - simple vs. complex
  - structured vs. unstructured
- First Mantra of reactive BBR: the presence of a stimulus is necessary, but not sufficient, to evoke a motor response in a BB robot.
  - Hence, need a threshold tau
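A sketch of a single behavior under the stimulus and threshold conventions above, assuming a hypothetical "obstacle" perceptual class and a robot that only uses the x component of the six-tuple. `lam` stands for lambda (a reserved word in Python) and `TAU` is an arbitrary illustrative value for tau.

```python
from typing import NamedTuple, Tuple

# <x, y, z, theta, phi, psi>: three translations, three rotations
Response = Tuple[float, float, float, float, float, float]
ZERO: Response = (0.0, 0.0, 0.0, 0.0, 0.0, 0.0)
TAU = 0.2  # hypothetical threshold tau

class Stimulus(NamedTuple):
    p: str      # perceptual class
    lam: float  # intensity lambda

def back_away(stim: Stimulus) -> Response:
    """Presence of the stimulus is necessary but not sufficient:
    below TAU (or for other perceptual classes) the response is all zeros."""
    if stim.p != "obstacle" or stim.lam < TAU:
        return ZERO
    # Retreat along x, more strongly for more intense stimuli.
    return (-stim.lam, 0.0, 0.0, 0.0, 0.0, 0.0)
```

A weak obstacle reading (`lam=0.1`) produces no motion, while `lam=0.5` produces a retreat of 0.5 along x.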

What is a behavioral mapping?

- Formally for motors: f:(p,lambda)-><x,y,z,theta,phi,psi> (where <x,y,z,theta,phi,psi>=<0,0,0,0,0,0> if lambda<tau)
- In addition: gains (to modify response strength, also used to "integrate behaviors"; will talk about this later)
  - e.g., <a,b,c,d,e,f>*<x,y,z,theta,phi,psi> = <a*x,b*y,c*z,d*theta,e*phi,f*psi> (g=<a,b,c,d,e,f> is a vector of scalars that modify the respective components of the response r; put more succinctly: r'=gr)
- Note: f can be any function
  - However, commonly used functions are
    - constant (e.g., "move at a constant speed")
    - "binary" threshold (e.g., "stop when a wall is encountered")
    - linear (e.g., "move faster the farther away you are from a wall")
    - combinations of the above (e.g., "move at a constant speed while you cannot sense a wall; once you sense it, slow down proportionally to the distance to the wall, and stop once you reach a critical threshold")
- Distinguish: discrete vs. continuous responses
  - discrete responses categorize the sensory space into discrete categories and map a particular response to each category (as in the above examples)
  - continuous responses do not categorize the sensory space; rather, they establish "correlations" (or more to the point: functional dependencies) between stimuli and responses (force metaphor)
- Problem: how to integrate two or more behavioral mappings? (no issue if we only have one, e.g., as for toy problem one)
  - Need to think of ways to achieve this integration, since most likely we will have different behaviors that the robot should exhibit at different times
  - Subsequent issues: action selection and behavior arbitration
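The gain vector and the "combination" speed profile described above can be sketched as follows. `CRUISE`, `SENSE_RANGE`, and `STOP_DIST` are hypothetical parameters; the linear part ramps from cruise speed at the edge of the sensing range down to zero at the stop threshold, which is one reasonable reading of "slow down proportionally".

```python
from typing import Sequence, Tuple

def apply_gains(g: Sequence[float], r: Sequence[float]) -> Tuple[float, ...]:
    """r' = g r, multiplying the response component by component."""
    if len(g) != len(r):
        raise ValueError("gain and response must have the same length")
    return tuple(gi * ri for gi, ri in zip(g, r))

CRUISE = 1.0       # constant speed while no wall is sensed (hypothetical)
SENSE_RANGE = 2.0  # walls closer than this are "sensed" (hypothetical)
STOP_DIST = 0.25   # critical threshold: stop here (hypothetical)

def approach_speed(wall_dist: float) -> float:
    if wall_dist >= SENSE_RANGE:   # constant part
        return CRUISE
    if wall_dist <= STOP_DIST:     # binary threshold part
        return 0.0
    # Linear part: CRUISE at SENSE_RANGE, falling to 0 at STOP_DIST.
    return CRUISE * (wall_dist - STOP_DIST) / (SENSE_RANGE - STOP_DIST)
```

The three pieces meet continuously at the boundaries, so the robot never jumps abruptly from cruising to a lower speed.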

Assembling and Implementing "Behaviors"

Discrete vs. continuous encoding of behaviors

- What exactly is the difference?
  - First cut: finitely vs. potentially infinitely many responses
    - Note: infinitely many behavioral responses are only possible if the sensory space is also infinite (not very likely!)
  - Second cut: granularity of response
    - Compare: "moving forward" (for some time) vs. "moving forward one yard" or "moving forward for 10 seconds at speed 1/10 yard/sec"
  - Third cut: architectural representation of response
    - In the former case, there is a component for "moving forward" that may or may not give rise to the specific "moving forward one yard" (given other components and their interactions), whereas in the latter there is a particular component for "moving forward one yard"
- Different ways of discrete encodings:
  - Condition-action rules: IF perception/condition THEN action ENDIF
    - E.g., Nilsson's teleo-reactive rules (e.g., "forward at speed s", as opposed to "forward one yard")
  - Goal-reduction rules (Gapps, situated automata): ACHIEVE condition/action DO action ACHIEVE action/condition ... ENDACHIEVE
  - Brooks's Behavior Language: (WHENEVER condition &rest body-forms)
  - Most so-called "agent architectures" specify a finite set of "possible actions" (e.g., dynamic logic and various logics used to describe the temporal behavior of systems)
  - An agent is usually construed as a function F:(Inputs,States)->(Actions,States) (FSM!)

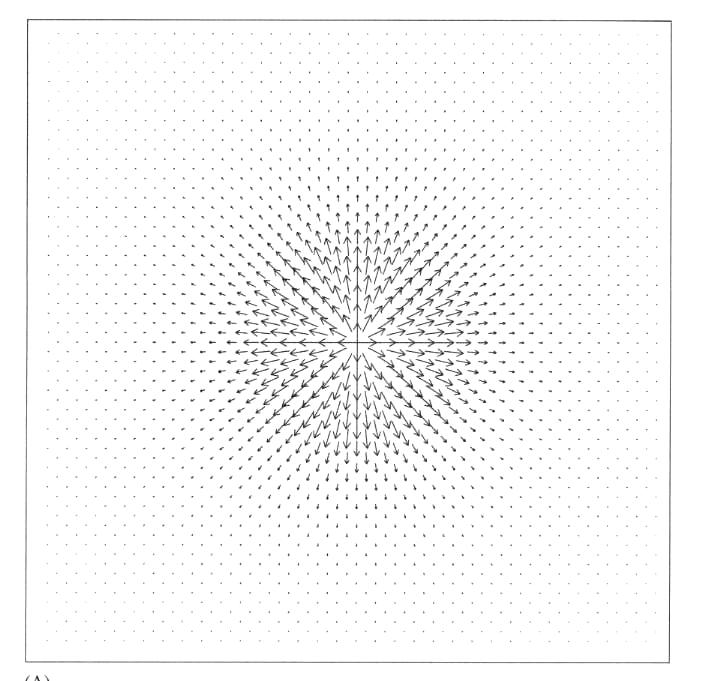

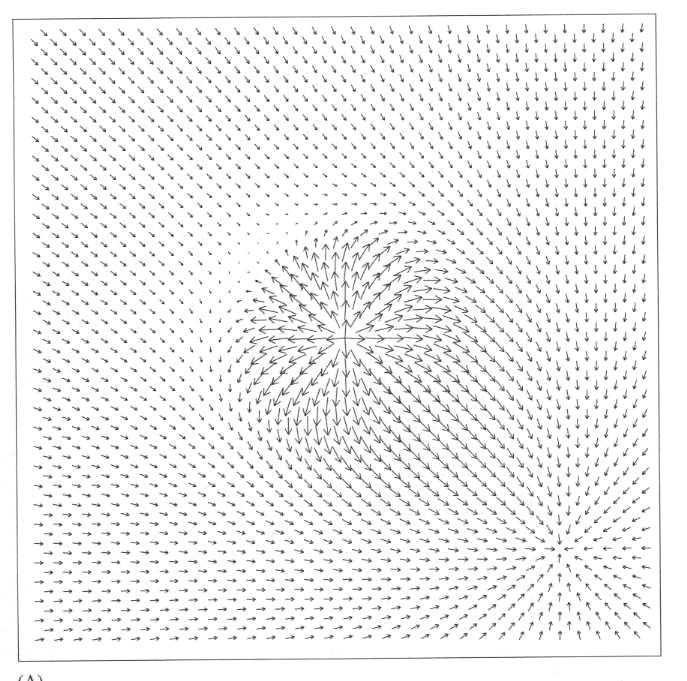

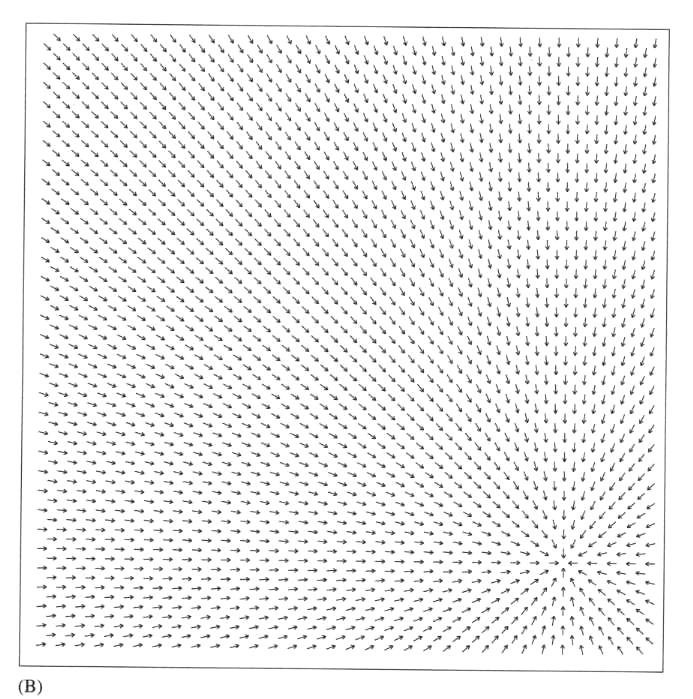

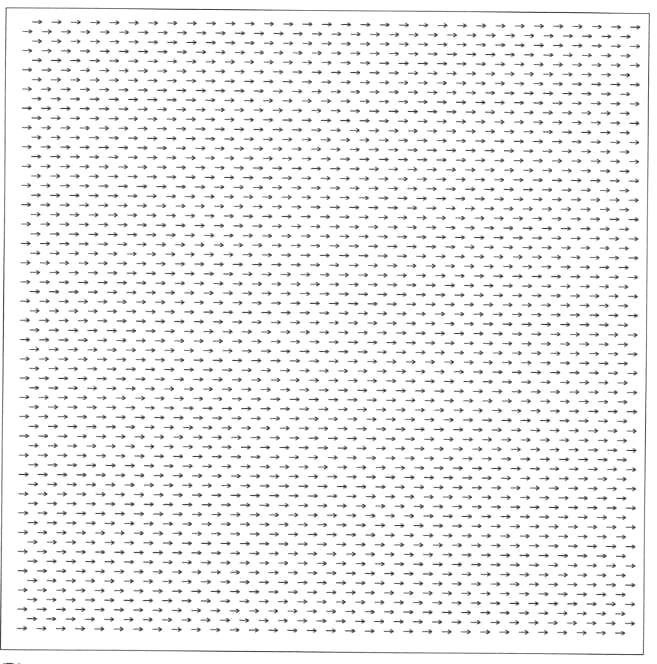

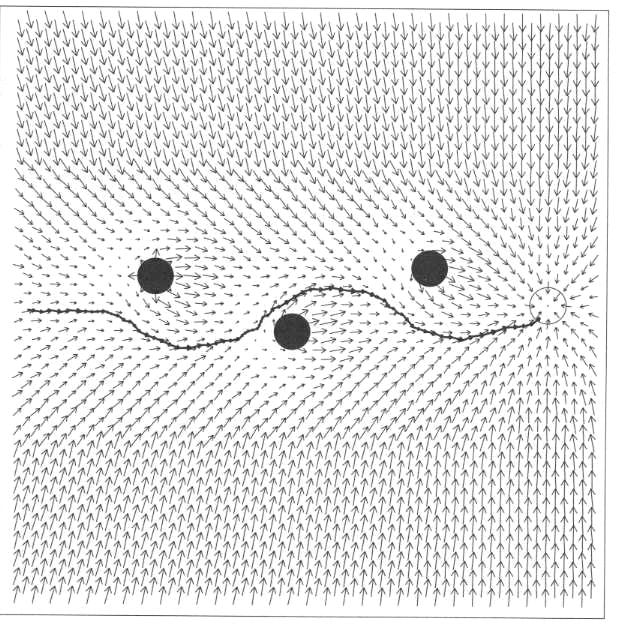

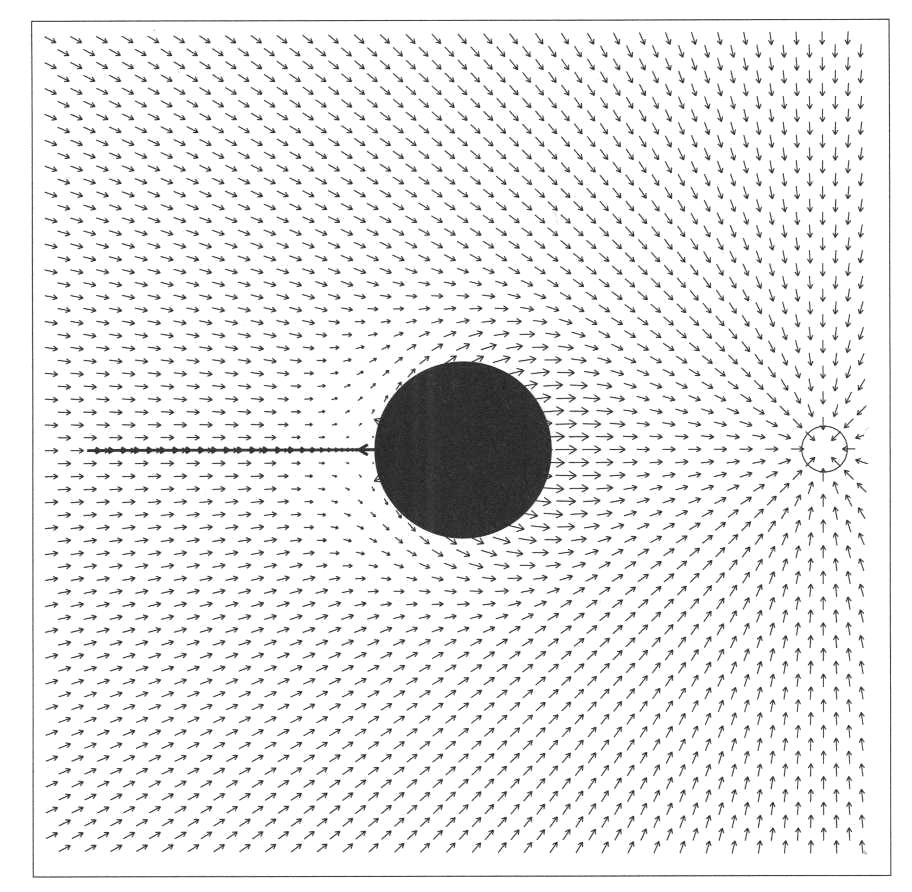

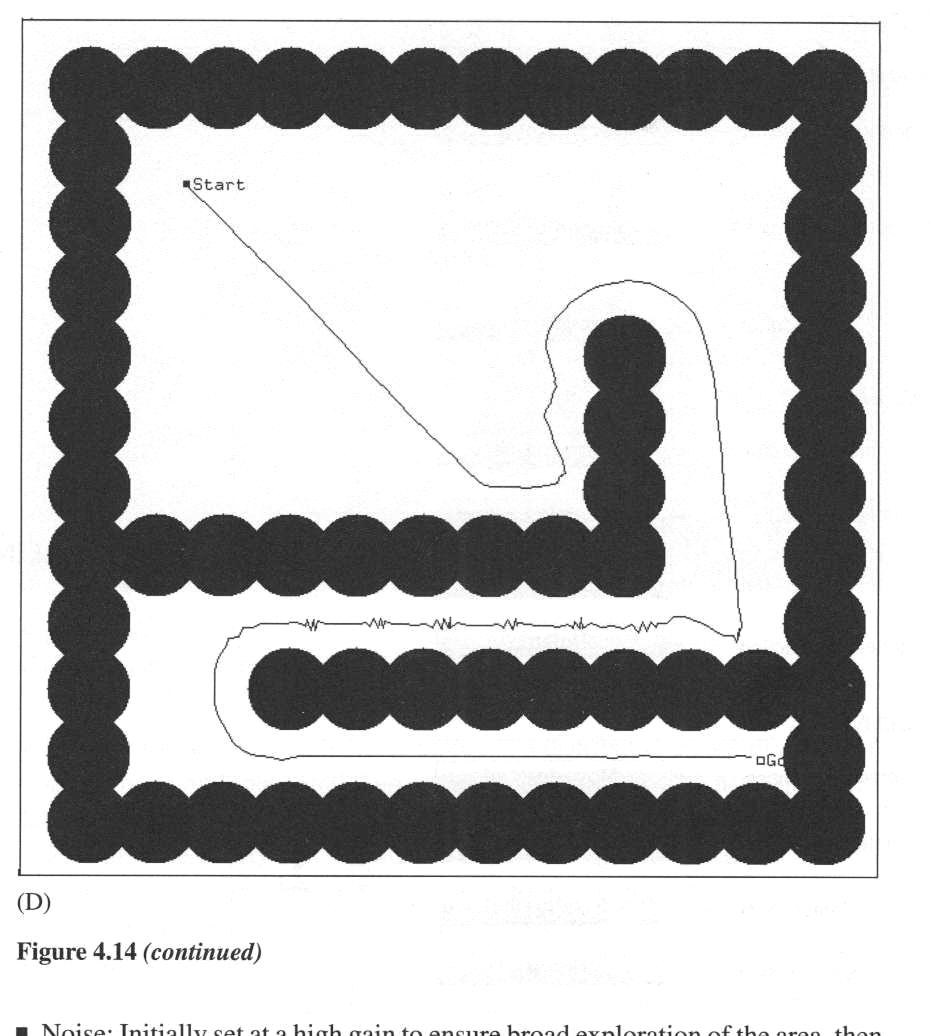
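A minimal sketch of an ordered list of condition-action rules in the teleo-reactive style: the rules are re-evaluated on every control cycle, and the first rule whose condition holds determines the (durative) action. The world-state keys `dist_to_goal` and `bearing_to_goal` and the numeric tolerances are assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

World = Dict[str, float]
Rule = Tuple[Callable[[World], bool], str]

# Ordered rules: earlier rules take precedence. Actions are durative
# ("forward at speed s"), not discrete ("forward one yard").
RULES: List[Rule] = [
    (lambda w: w["dist_to_goal"] < 0.1, "stop"),            # goal reached
    (lambda w: abs(w["bearing_to_goal"]) > 0.2, "rotate"),  # face the goal
    (lambda w: True, "forward"),                            # default
]

def tr_step(world: World, rules: List[Rule] = RULES) -> str:
    """Return the action of the first rule whose condition holds."""
    for condition, action in rules:
        if condition(world):
            return action
    raise RuntimeError("no rule applies")
```

Calling `tr_step` once per control cycle means the robot keeps rotating until it faces the goal, then drives forward until it is close enough to stop, without any explicit sequencing.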

- Continuous encodings:
  - Use a continuous mapping from sensors to motors, i.e., express the functional relationship between what is sensed and what to do
  - Commonly used: vector fields

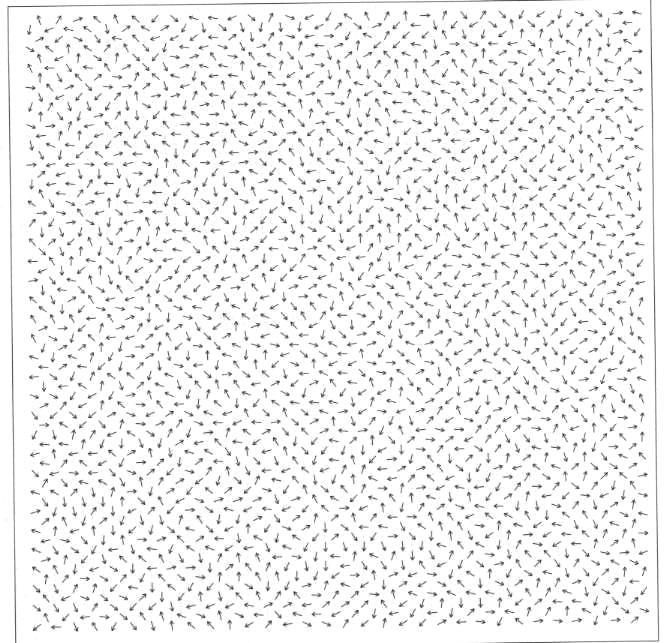

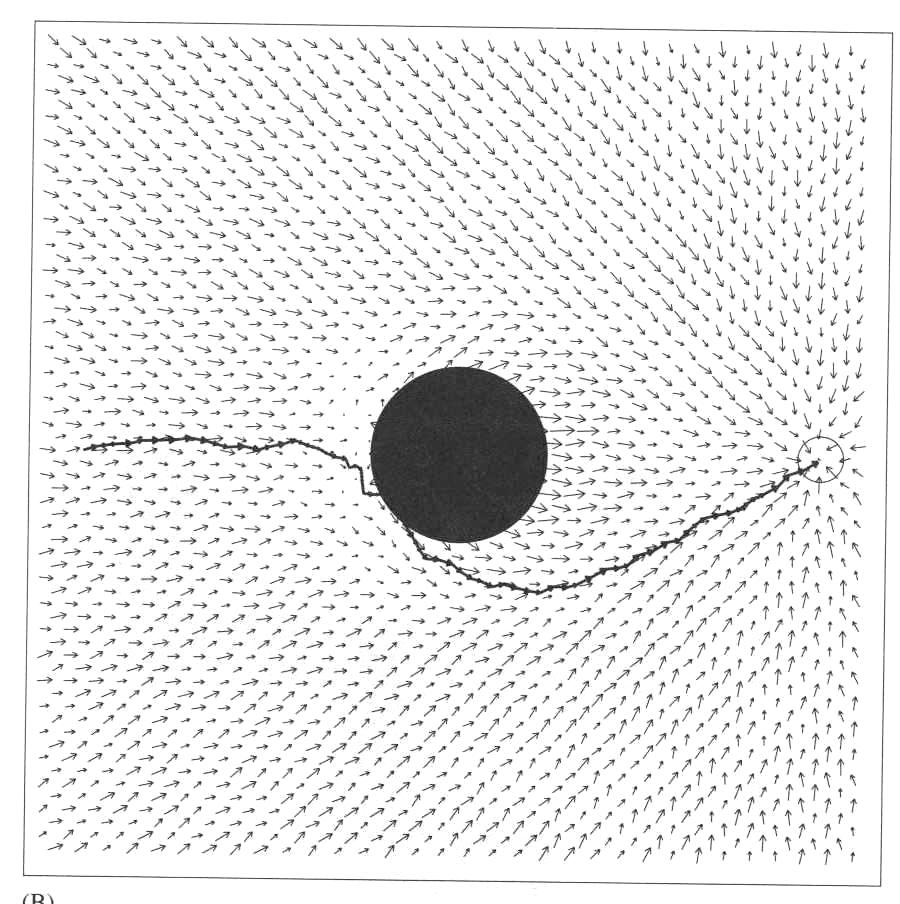

  - The environment is construed as a vector field: at each location a vector indicates the direction and strength of the motor response (may have to do this for different motors)
  - Vectors are used as "forces", where forces are usually related to distance in space by the "inverse-square law": force = 1/(distance*distance)
  - Use: attractive and repulsive forces to "classify" sensory stimuli (i.e., some stimuli will produce attractive forces, others will produce repulsive forces)
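The inverse-square convention can be sketched for a planar agent as below. Sources are given as (position, strength) pairs, where a positive strength attracts and a negative one repels; this sign convention and the function names are illustrative assumptions.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]

def inverse_square_force(agent: Vec, source: Vec, strength: float) -> Vec:
    """Force at `agent` due to `source`: magnitude strength / d^2,
    directed toward the source (strength > 0) or away from it (< 0)."""
    dx, dy = source[0] - agent[0], source[1] - agent[1]
    d = math.hypot(dx, dy)
    if d == 0.0:
        return (0.0, 0.0)  # undefined at the source itself; return zero
    mag = strength / (d * d)
    return (mag * dx / d, mag * dy / d)

def field_at(agent: Vec, sources: List[Tuple[Vec, float]]) -> Vec:
    """Vector of the field at one location, given all stimulus sources."""
    fx = fy = 0.0
    for source, strength in sources:
        vx, vy = inverse_square_force(agent, source, strength)
        fx += vx
        fy += vy
    return (fx, fy)
```

For an agent at the origin, an attractor at (2, 0) and a repeller at (-2, 0) each contribute 0.25 in the +x direction, so the field there is (0.5, 0).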

Behavior coordination

- Need to integrate different behaviors to get interesting system behavior
- Design issues:
  - what is the overall behavior the system needs to achieve?
  - how can it be broken down into components?
- General case: given S (stimuli vector), B (vector of all behavior functions), G (vector of gains for each behavior), we get the response vector R=G*B(S)
  - Need to obtain a single overall response from R (by selecting one component or by combining them), hence need a "coordination" function C that maps the response vector R to a single response rho: rho=C(R)
  - C is also called "coordination function (strategy)" or "action-selection function (mechanism)"
- Different coordination/action-selection methods:
  - competitive
    - priority-based arbitration (e.g., through dominance hierarchies, where higher levels dominate or suppress lower levels)
    - winner-take-all (e.g., the behavior with the highest activation gets exclusive control of the motors)
    - direct competition (through excitation and inhibition)
    - voting for actions
  - cooperative
    - "field fusion" (remember: vector fields are "additive")
    - "desirability vectors" (e.g., try to find the action that maximizes the desirability values of the behaviors)
- Putting things together:
  - parallel execution vs. sequencing of actions
  - hierarchical vs. non-hierarchical organization
  - => architectures!
- Issue: emergence of behavior, a very tricky notion (often poorly presented and understood; for a good discussion, see Wimsatt http://www.ageofsig.org/3M/archive/wimsatt.pdf)
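The formulas R=G*B(S) and rho=C(R) can be made concrete with planar motor vectors. The sketch below assumes each behavior returns a 2-D vector, uses vector magnitude as a stand-in for "activation", and shows two competitive choices of C and one cooperative one; the behaviors `go_to_goal` and `avoid` are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vec = Tuple[float, float]

def responses(S: Dict, B: Sequence[Callable[[Dict], Vec]],
              G: Sequence[float]) -> List[Vec]:
    """R = G * B(S): each behavior's response scaled by its gain."""
    R = []
    for b, g in zip(B, G):
        x, y = b(S)
        R.append((g * x, g * y))
    return R

def magnitude(v: Vec) -> float:
    return (v[0] ** 2 + v[1] ** 2) ** 0.5

def winner_take_all(R: List[Vec]) -> Vec:
    """Competitive C: the strongest response gets exclusive control."""
    return max(R, key=magnitude)

def priority(R: List[Vec]) -> Vec:
    """Competitive C: R is ordered highest priority first; the first
    non-zero response wins."""
    for r in R:
        if magnitude(r) > 0.0:
            return r
    return (0.0, 0.0)

def field_fusion(R: List[Vec]) -> Vec:
    """Cooperative C: vector summation of all responses."""
    return (sum(r[0] for r in R), sum(r[1] for r in R))

# Hypothetical behaviors for illustration
def go_to_goal(S: Dict) -> Vec:
    return (1.0, 0.0)

def avoid(S: Dict) -> Vec:
    return (0.0, 0.5) if S["obstacle"] else (0.0, 0.0)
```

With an obstacle present and gains `[3.0, 1.0]` for `[avoid, go_to_goal]`, both competitive strategies pick the sideways avoidance vector (0, 1.5), whereas field fusion yields the blended heading (1, 1.5).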

Architecture Example: Subsumption

- Developed by Rodney Brooks in the mid-1980s
- Comes with a whole "philosophy" about how to design robots: strongly opposed to the "sense-plan-act" loop of classical robotics (remember Shakey?)
- Core features:
  - anti-representationalist: "the world is its own best model" (Brooks 1991)
  - anti-"deliberatist": "planning is just a way of avoiding figuring out what to do next" (Brooks 1987)
  - anti-realist with respect to intelligence: "intelligence is in the eye of the beholder" (Brooks 1991)
  - emphasizes control systems that are
    - simple
    - cheap
    - robust
    - autonomous
    - parallel
    - hierarchical
    - incrementally extensible
- keywords:
  - Basic component: augmented finite state machine (AFSM)
    - Why augmented? Because there are additional control mechanisms (e.g., for timing, resetting)
  - Inhibition, suppression, and reset links (to inhibit inputs, suppress and replace outputs of AFSMs, as well as to reset them)
  - Subsumption architectures then consist of an arrangement of layers, each of which contains one or more AFSMs
  - Difficult to specify interactions between AFSMs
    - for easier use: Behavior Language (abstraction over AFSMs)
  - Behavior coordination is competitive:
    - layered dominance hierarchy of AFSMs (i.e., higher ones dominate lower ones)
    - Arbitration mechanisms between layers may use any of the three links

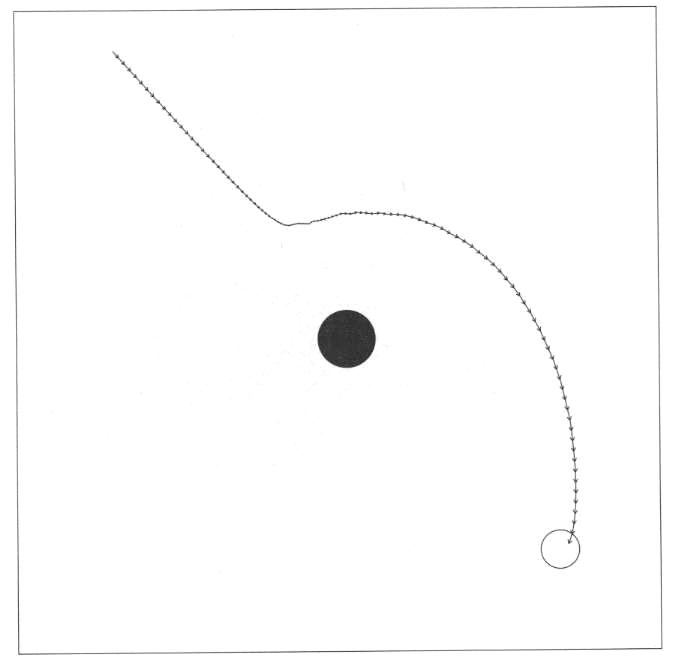
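A simplified sketch of suppression and inhibition links between two hypothetical layers (layer 0 `wander`, layer 1 `seek_light`). Messages are strings and `None` means "no message on the wire"; real AFSMs also hold a suppression or inhibition for a time period, which is omitted here, and, following the notes above, inhibition is applied to an input wire.

```python
from typing import Dict, Optional

Msg = Optional[str]  # None means "no message on this wire right now"

def suppress(lower: Msg, higher: Msg) -> Msg:
    """Suppression node: a message from the higher layer replaces the
    lower layer's message on the same wire."""
    return higher if higher is not None else lower

def inhibit(signal: Msg, inhibitor: Msg) -> Msg:
    """Inhibition node: any message on the inhibitor blocks the signal."""
    return None if inhibitor is not None else signal

def wander(_sensors: Dict[str, str]) -> Msg:   # layer 0 (hypothetical)
    return "forward"

def seek_light(light: Msg) -> Msg:             # layer 1 (hypothetical)
    return "turn-to-light" if light is not None else None

def motor_command(sensors: Dict[str, str]) -> Msg:
    # Inhibit layer 1's light input while a "dazzled" signal is present.
    light_in = inhibit(sensors.get("light"), sensors.get("dazzled"))
    # Layer 1's output, when present, suppresses and replaces layer 0's.
    return suppress(wander(sensors), seek_light(light_in))
```

Layer 0 keeps working on its own; adding layer 1 changes the behavior only when it has something to say, which is what makes the architecture incrementally extensible.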

Architecture Example: Motor Schemas

- Arbib, Arkin, et al.
- Biologically inspired ("schema theory"; also: motor control in insects)
- Reactive in nature (functional sensory-motor mapping):
  - uses force or potential fields to map sensory space into motor space
- Cooperative behavior coordination (through summation of fields!)
- Continuous response encoding

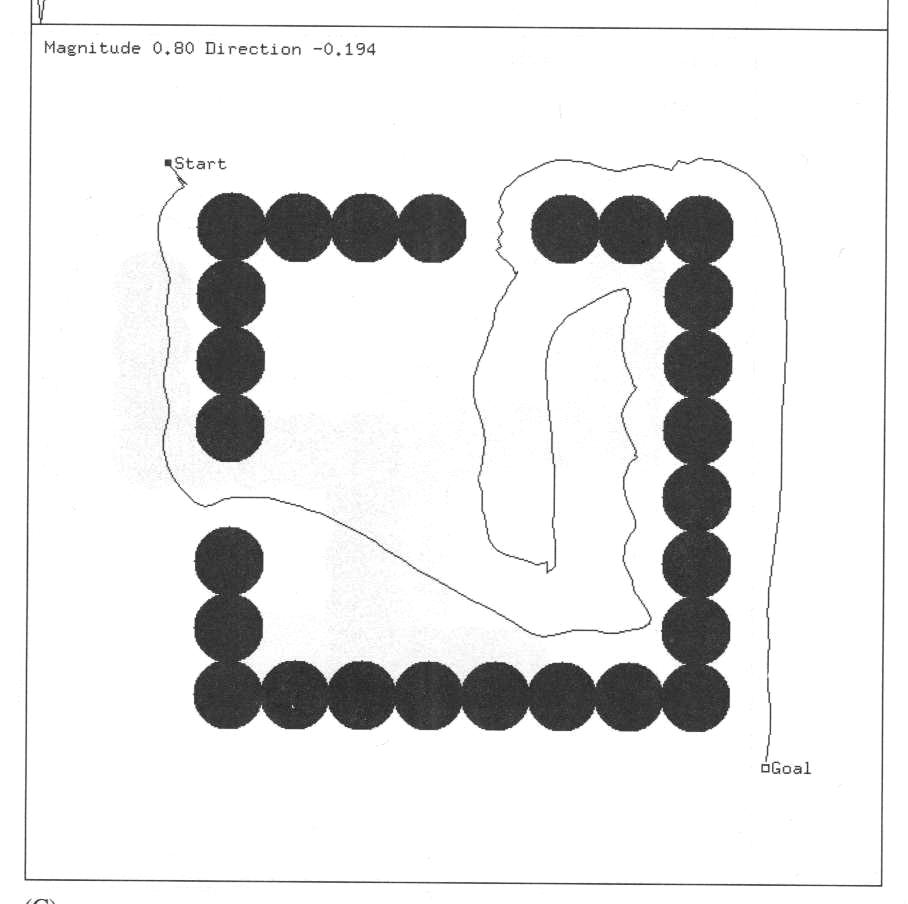

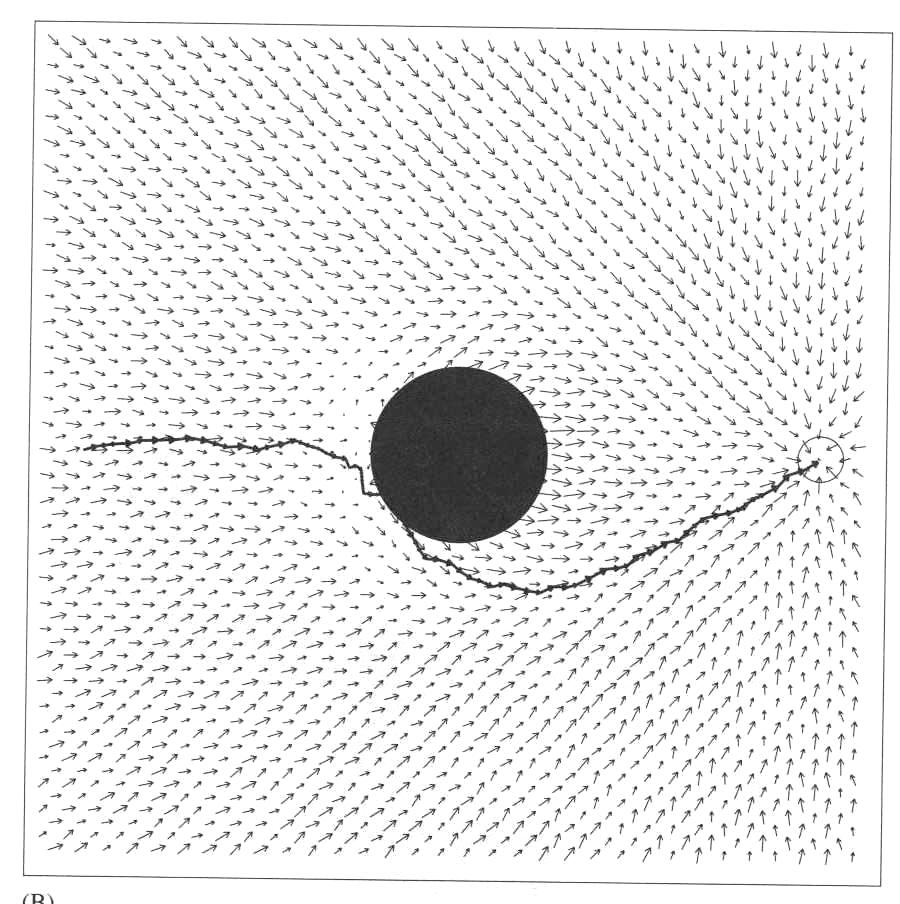

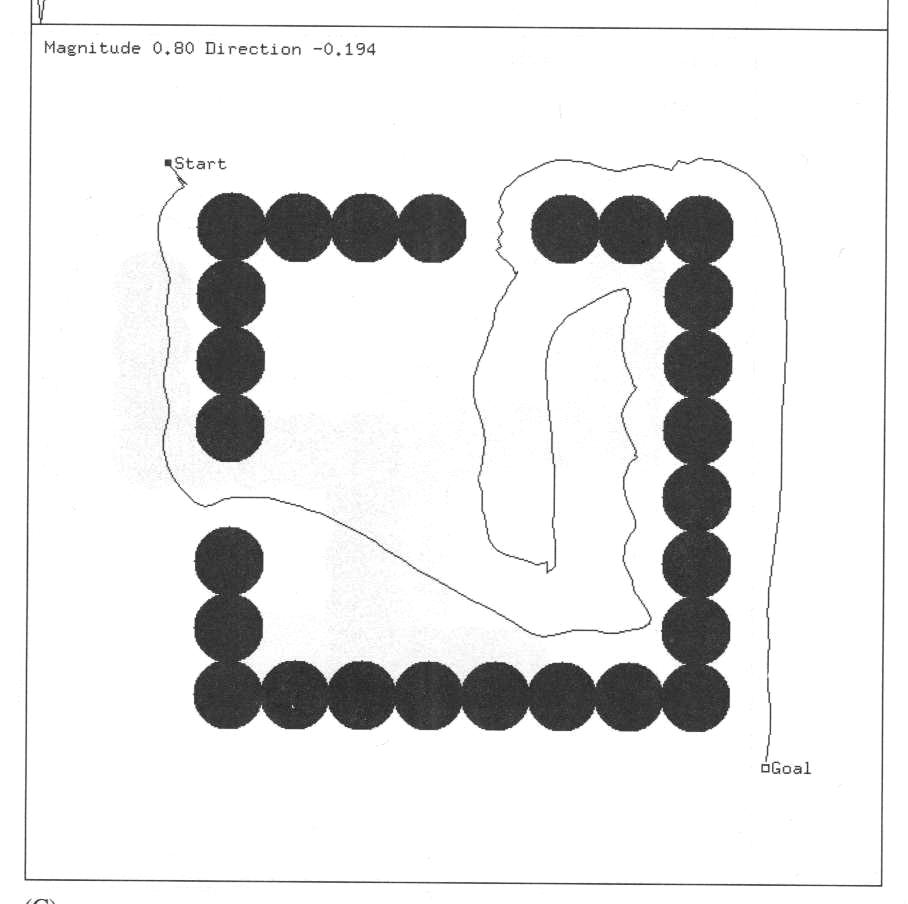
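A sketch of three motor schemas combined by vector summation, assuming a planar robot. The obstacle schema uses a piecewise-linear magnitude (zero outside a sphere of influence, growing as the obstacle gets closer) and caps it at `gain` inside `radius` instead of making it infinite; all parameter values are hypothetical. The optional noise schema illustrates the random-noise remedy for local minima discussed below.

```python
import math
import random
from typing import List, Optional, Tuple

Vec = Tuple[float, float]

def move_to_goal(pos: Vec, goal: Vec, gain: float = 1.0) -> Vec:
    """Constant-magnitude vector pointing at the goal."""
    dx, dy = goal[0] - pos[0], goal[1] - pos[1]
    d = math.hypot(dx, dy)
    return (0.0, 0.0) if d == 0 else (gain * dx / d, gain * dy / d)

def avoid_obstacle(pos: Vec, obs: Vec, radius: float = 0.5,
                   influence: float = 2.0, gain: float = 1.0) -> Vec:
    """Repulsion: zero beyond `influence`, rising linearly as the agent
    closes in, capped at `gain` inside `radius`."""
    dx, dy = pos[0] - obs[0], pos[1] - obs[1]
    d = math.hypot(dx, dy)
    if d >= influence or d == 0:
        return (0.0, 0.0)  # out of range (or no defined direction)
    mag = gain * min(1.0, (influence - d) / (influence - radius))
    return (mag * dx / d, mag * dy / d)

def noise(rng: random.Random, gain: float = 0.1) -> Vec:
    """Small vector in a random direction."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return (gain * math.cos(angle), gain * math.sin(angle))

def fused(pos: Vec, goal: Vec, obstacles: List[Vec],
          rng: Optional[random.Random] = None) -> Vec:
    """Cooperative coordination: sum all schema outputs."""
    vecs = [move_to_goal(pos, goal)]
    vecs += [avoid_obstacle(pos, o) for o in obstacles]
    if rng is not None:
        vecs.append(noise(rng))
    return (sum(v[0] for v in vecs), sum(v[1] for v in vecs))
```

At (1.5, 0), with the goal at (4, 0) and an obstacle at (2, 0), the goal and obstacle vectors cancel exactly, so without noise the summed field is zero: a local minimum. Passing an `rng` adds a small non-zero push that can move the agent off that point.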

Examples of Fields and their Combinations

- Combination:

- Path taken by the agent:

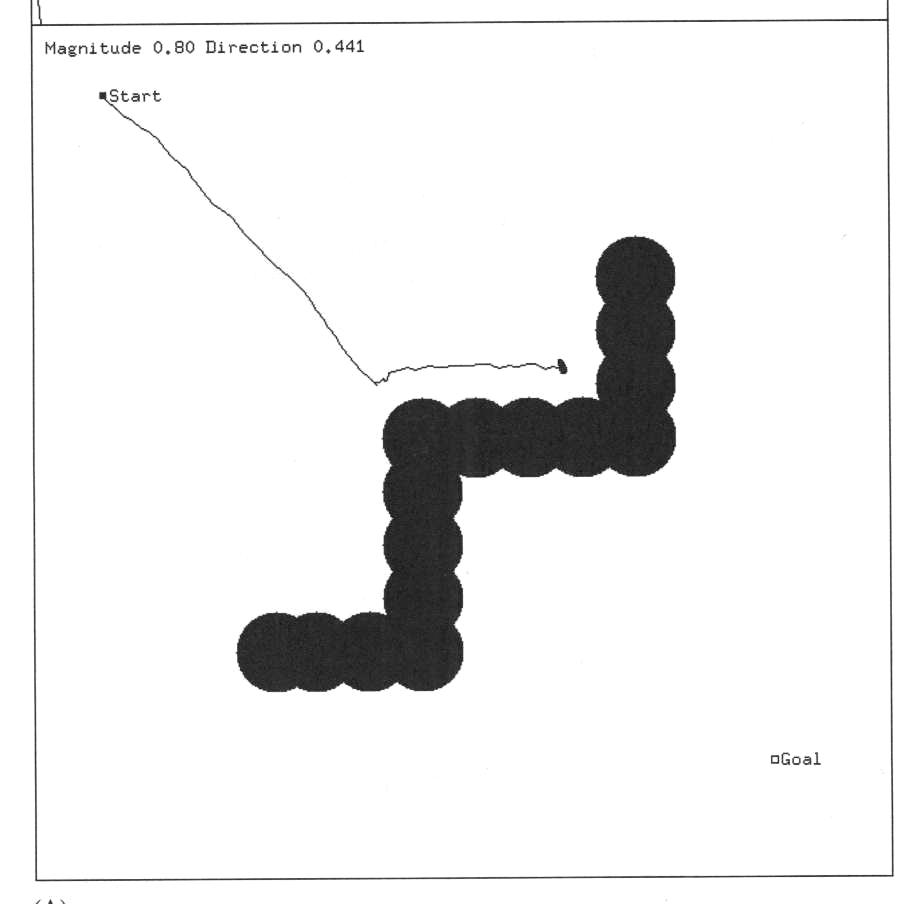

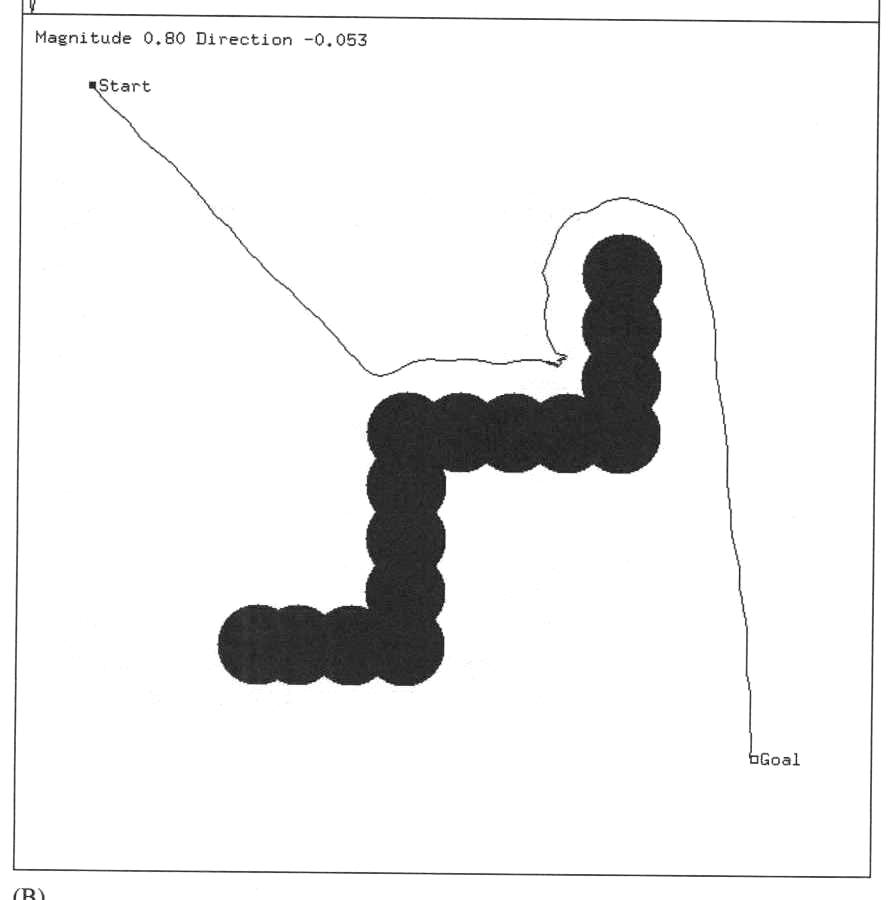

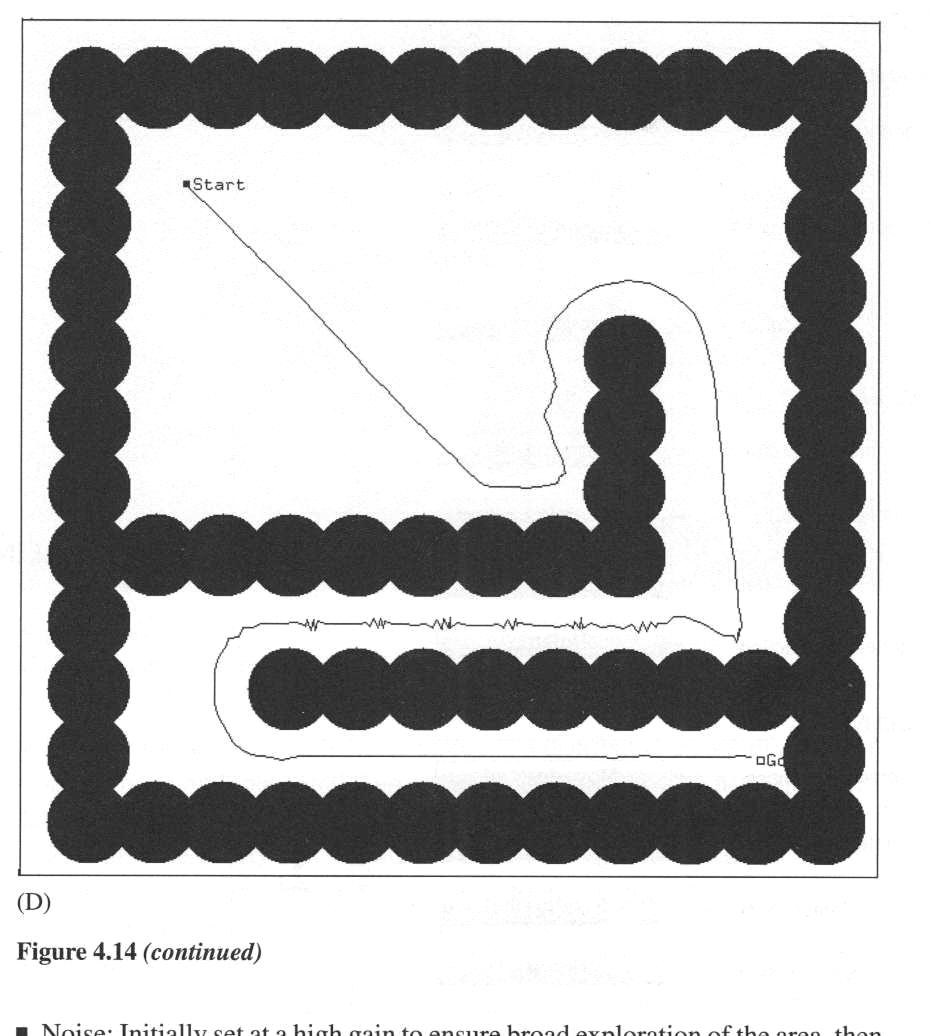

- Corridor following:

- Issue: local minima

- Solution: add random noise!

- To get out of larger wells:

- Allows agents to traverse mazes, for example:

From Reactive to Deliberative Architectures

Extending Reactivity

- Why do we need to extend reactivity in the first place?
  - Claim: every robot behavior can be implemented solely in reactive behaviors. Do you believe this?
  - Because we want to improve the performance of the agent (i.e., add new capacities), make it "smarter"
- In what directions can we/do we want to extend reactive behaviors?
  - Remember: "avoid-past schema", to keep track of past locations without explicitly keeping track of environmental states
    - Analysis: what exactly did we keep track of?
  - Hence, one possible extension: explicitly allow for "internal states" to keep track of environmental states --> memory!
    - Distinguish: short-term vs. long-term memory
  - More generally: allow for "internal states" to "stand in" for something else --> representation!
    - Careful: this is a very complex notion, with lots of dispute in philosophy alone about it (and different disciplines use the term "representation" in very different ways)
    - Also note: state /= representation
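One way to turn the avoid-past idea into explicit short-term memory is sketched below: a bounded trail of recent positions, each exerting a weak repulsive push on the agent. The capacity, influence radius, and linear weighting are hypothetical choices; this is a minimal illustration, not Arkin's formulation.

```python
import math
from collections import deque
from typing import Deque, Tuple

Vec = Tuple[float, float]

class AvoidPast:
    """Remember the last `capacity` positions and push away from them."""

    def __init__(self, capacity: int = 50, influence: float = 1.0,
                 gain: float = 0.5) -> None:
        # Bounded deque: the oldest positions are forgotten automatically.
        self.trail: Deque[Vec] = deque(maxlen=capacity)
        self.influence = influence
        self.gain = gain

    def remember(self, pos: Vec) -> None:
        self.trail.append(pos)

    def vector(self, pos: Vec) -> Vec:
        """Sum of repulsions from remembered positions within `influence`;
        each is weighted linearly, strongest when closest."""
        vx = vy = 0.0
        for px, py in self.trail:
            dx, dy = pos[0] - px, pos[1] - py
            d = math.hypot(dx, dy)
            if 0.0 < d < self.influence:
                w = self.gain * (self.influence - d) / self.influence
                vx += w * dx / d
                vy += w * dy / d
        return (vx, vy)
```

The internal state here (the trail) is memory of where the agent has been; whether it also counts as a representation is exactly the kind of question raised above.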

Representations

- Why representations?
  - E.g., because they allow us to acquire knowledge and use reasoning methods (e.g., to predict future developments or infer facts that cannot be observed)
  - What are other reasons?
- What is required for representation?
  - Systematic correlation of a virtual machine state with another state (either in the environment or within the agent)
    - Why not "state in the architecture"?
  - Note: maintaining such a correlation is not trivial. What is involved?
- What to represent?
  - "Location" (of the agent or of a landmark in the environment)
    - E.g., use "triangulation" from known landmarks to locate yourself or other objects
    - Prerequisite to making maps
    - Evidence from biology that lots of animals are capable of localization (using triangulation)
    - Also: animals use "cognitive maps"
    - Distinguish: agent-centric coordinate system vs. world coordinate system
  - In general: want to represent knowledge (about the world). Why?
    - Remember the different kinds of "knowledge" we distinguished earlier?
      - implicit vs. explicit
      - positive vs. negative
      - declarative vs. procedural
      - innate vs. acquired
      - direct vs. indirect
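Locating oneself from bearings to known landmarks, together with the agent-centric vs. world distinction, can be sketched as follows. The sketch assumes the robot knows its world heading (e.g., from a compass), measures landmark bearings relative to that heading, and knows the world positions of two landmarks; it then intersects the two bearing lines.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]

def to_world_bearing(heading: float, relative_bearing: float) -> float:
    """Agent-centric bearing (radians, CCW from the robot's heading)
    -> world bearing (radians, CCW from the world x-axis)."""
    return heading + relative_bearing

def triangulate(l1: Vec, l2: Vec, heading: float, b1: float, b2: float) -> Vec:
    """Position P such that landmark l_i lies along world bearing a_i from P,
    i.e. l_i = P + t_i * (cos a_i, sin a_i)."""
    a1 = to_world_bearing(heading, b1)
    a2 = to_world_bearing(heading, b2)
    u1 = (math.cos(a1), math.sin(a1))
    u2 = (math.cos(a2), math.sin(a2))
    # Subtracting the two equations: t1*u1 - t2*u2 = l1 - l2.
    # Solve this 2x2 system for t1 with Cramer's rule.
    rx, ry = l1[0] - l2[0], l1[1] - l2[1]
    det = u1[0] * (-u2[1]) - (-u2[0]) * u1[1]
    if abs(det) < 1e-9:
        raise ValueError("bearings are (anti)parallel; position is not determined")
    t1 = (rx * (-u2[1]) - (-u2[0]) * ry) / det
    return (l1[0] - t1 * u1[0], l1[1] - t1 * u1[1])
```

For a robot at (1, 2) facing along the world y-axis (heading pi/2), a landmark at (4, 2) appears at relative bearing -pi/2 and one at (1, 6) straight ahead (relative bearing 0); `triangulate` recovers (1, 2) up to rounding.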

Maps

- Why are maps advantageous?
- Short-term maps
  - Improve perceptions (e.g., save recent sensory readings and use them to constrain what could be "out there")
  - Use for navigation or manipulation of objects
  - Behavioral memory: keep track of sensor readings (in reasonably "stable" environments), typically using "grid representations" of the space around the agent
  - Distinguish different kinds of grid representations:
- Long-term maps
  - Represent "knowledge" of the environment
  - Different encoding methods:
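The short-term "behavioral memory" grid mentioned under short-term maps can be sketched as an agent-centered certainty grid whose evidence decays over time. Grid size, cell size, decay rate, threshold, and the axis convention are hypothetical, and shifting the grid as the robot moves is omitted.

```python
from typing import List, Optional, Tuple

class ShortTermGrid:
    """Agent-centered certainty grid: cells accumulate evidence of being
    occupied, and the evidence decays so stale readings fade away."""

    def __init__(self, size: int = 11, cell: float = 0.2, decay: float = 0.9):
        self.size, self.cell, self.decay = size, cell, decay
        self.cells: List[List[float]] = [[0.0] * size for _ in range(size)]

    def _index(self, x: float, y: float) -> Optional[Tuple[int, int]]:
        # Agent sits in the center cell; x forward, y left (assumed convention).
        half = self.size // 2
        i, j = half + round(x / self.cell), half + round(y / self.cell)
        return (i, j) if 0 <= i < self.size and 0 <= j < self.size else None

    def decay_all(self) -> None:
        for row in self.cells:
            for k in range(len(row)):
                row[k] *= self.decay

    def add_reading(self, x: float, y: float, weight: float = 1.0) -> None:
        idx = self._index(x, y)
        if idx is not None:  # readings outside the grid are ignored
            i, j = idx
            self.cells[i][j] = min(1.0, self.cells[i][j] + weight)

    def occupied(self, x: float, y: float, threshold: float = 0.5) -> bool:
        idx = self._index(x, y)
        return idx is not None and self.cells[idx[0]][idx[1]] >= threshold
```

A single reading stays above the 0.5 threshold for six decay steps (0.9^6 is about 0.53) and drops below it on the seventh (0.9^7 is about 0.48), unless it is refreshed by new readings.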

Summary

- Main extensions:
  - short- and long-term memory components (e.g., maps containing landmarks)
  - representational capacities (e.g., of states in the environment, or states of the agent itself such as "goals")
    - why can it be advantageous to represent one's own goals?
  - methods that operate on representations
    - planning components (e.g., AuRA, Atlantis, Planner-Reactor, etc.)
      - this is what most BB architectures have focused on. Why?
    - reasoning components (e.g., BDI architectures, PRS, etc.)
    - reflective components (e.g., probabilistic modal logics plus belief nets, Koller et al.)
- Hybrid architectures (see section 6.6 in the Arkin book for a nice overview)
  - typically: reactive + deliberative
  - better: reactive + non-reactive
  - biological evidence: contention scheduling (Norman and Shallice, 1980; Cooper and Shallice, 2000)
  - often: layered ("layers of competence")
  - layers may model time frames
    - immediate: reactive
    - short-term: action sequences
    - long-term: deliberation (e.g., with explicit goal representations)

Case Study: Contention Scheduling

- Three layers:
  - supervisory system
  - schema layer
    - schema network
    - object network
    - resource network
  - low-level motor actions
- Influences:
  - top-down excitation
  - lateral inhibition
  - self-influence
  - external influence
  - random
- Intended to model human action sequencing ("coffee making example")
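The listed influences can be combined into a simplified interactive-activation update for competing schema nodes. This is an illustrative sketch, not the equations of the Cooper and Shallice model: the coefficients, the clamping to [0, 1], the selection threshold, and the schema names borrowed from the coffee example are all assumptions.

```python
import random
from typing import Dict, Optional

def cs_step(act: Dict[str, float], top_down: Dict[str, float],
            external: Dict[str, float], rng: random.Random,
            persistence: float = 0.8, self_gain: float = 0.1,
            inhibition: float = 0.3, noise_sd: float = 0.01) -> Dict[str, float]:
    """One synchronous update of all competing schema nodes."""
    total = sum(act.values())
    new = {}
    for s, a in act.items():
        lateral = inhibition * (total - a)       # inhibition from rivals
        net = (top_down.get(s, 0.0)              # top-down excitation
               + external.get(s, 0.0)            # external influence
               + self_gain * a                   # self-influence
               - lateral
               + rng.gauss(0.0, noise_sd))       # random influence
        new[s] = min(1.0, max(0.0, persistence * a + net))  # clamp to [0, 1]
    return new

def selected(act: Dict[str, float], threshold: float = 0.6) -> Optional[str]:
    """A schema is selected once its activation crosses the threshold."""
    best = max(act, key=act.get)
    return best if act[best] >= threshold else None
```

Starting from zero activation with slightly stronger top-down support for "add-sugar" (0.2) than for "add-milk" (0.1), lateral inhibition amplifies the difference over a few updates until "add-sugar" crosses the threshold and "add-milk" is driven down.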

Copyright © Matthias Scheutz, 2005